The Fastest Team

Achieving Autonomous Navigation with ROS

During the recent academic term, I had the opportunity to engage in a project where we developed a robot capable of autonomously navigating a maze. Similar to how nature’s convergent evolution often leads to a crab, robotics frequently converges on a blend of code and mathematics. This project was no exception, demanding extensive algorithm development.

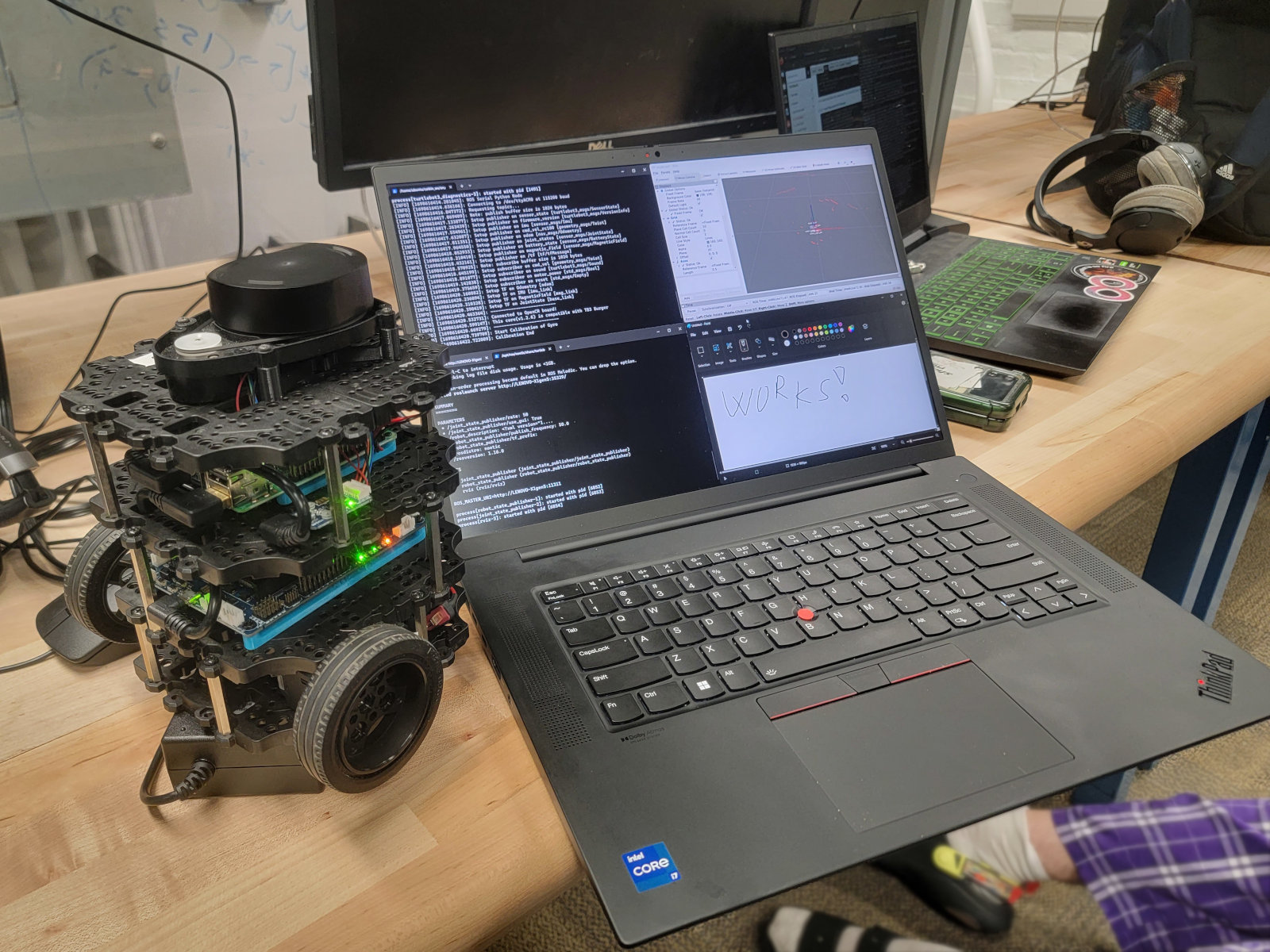

Working within a small, dedicated team, we used the Turtlebot3, a modular robot equipped with a LiDAR scanner. Our objective was to program the robot using the Robot Operating System (ROS) to autonomously map an environment, return to the starting point, and then navigate to any designated location within that map. The last step involves the "kidnapping" problem, which is particularly challenging because it requires the robot to re-localize itself on a pre-scanned map after being moved to an unknown position. We accomplished all of this well within the time limit.

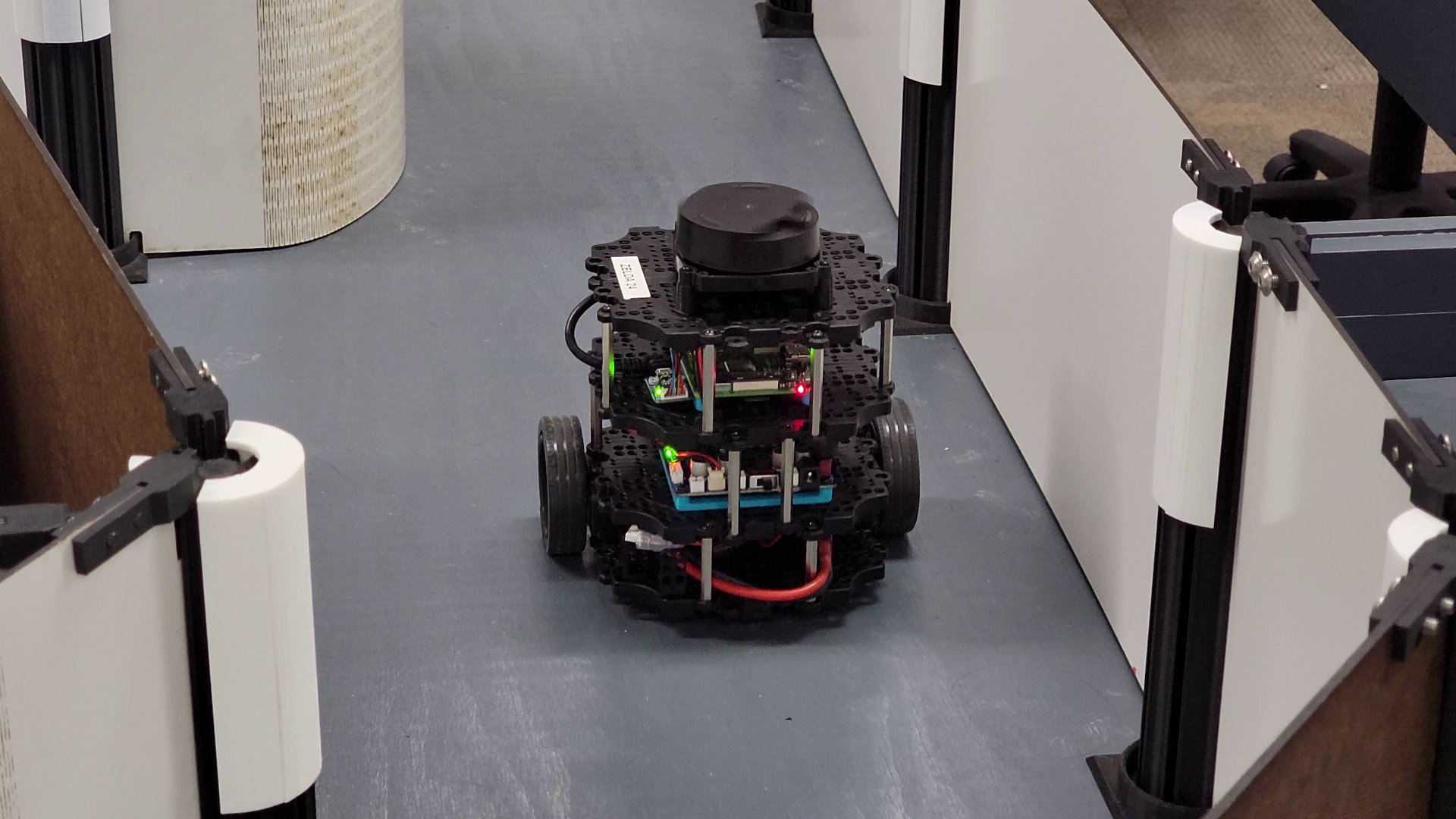

Zelda, our Turtlebot3, making its way through the maze

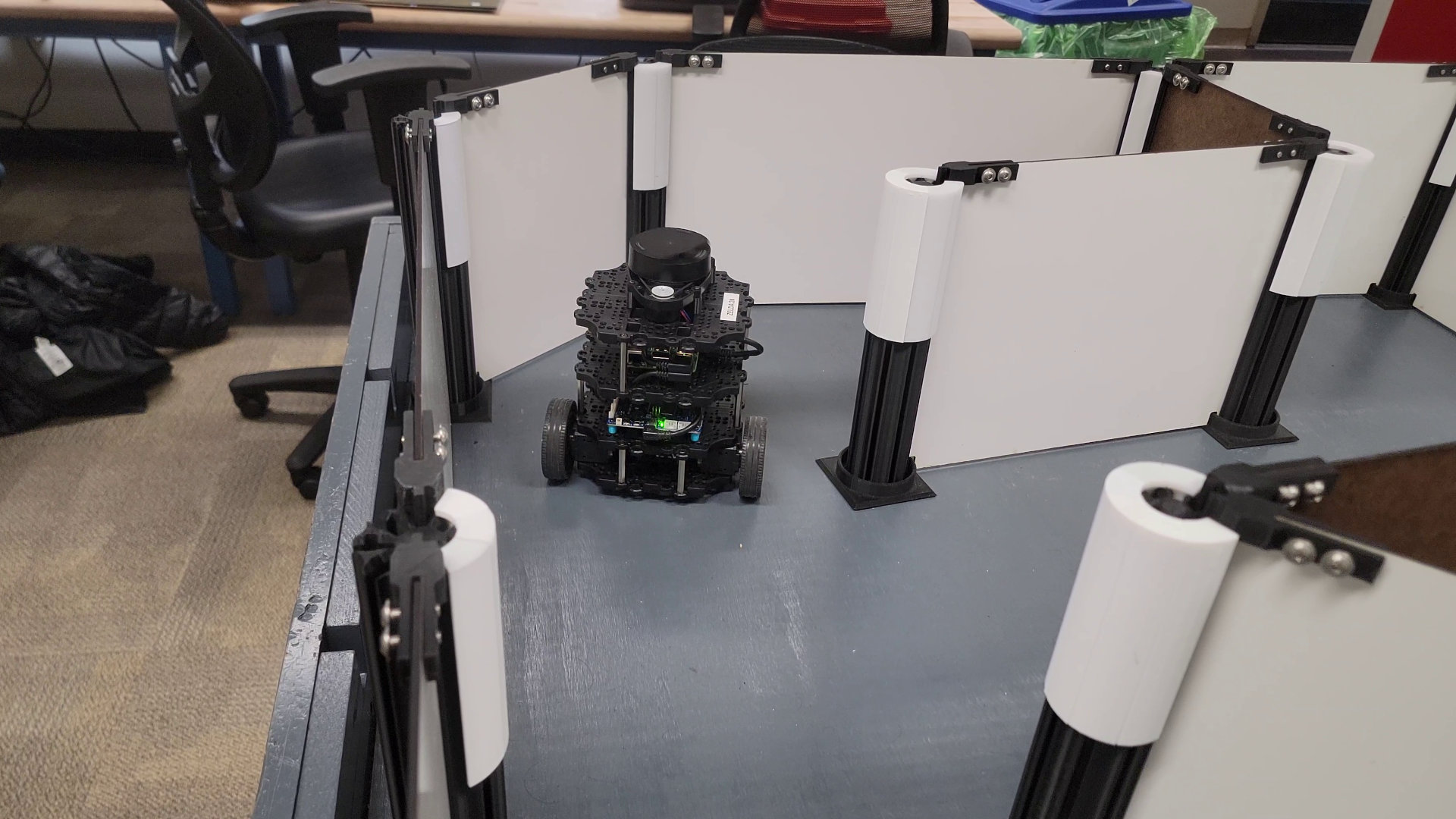

Our Turtlebot3 captured initiating a mapping run

Our team consisted of three insanely talented and dedicated members who were a pleasure to work with. The experience was both challenging and enjoyable: we not only completed the assigned task but also used the remaining time to experiment with additional features, such as localizing without any physical movement. We wrote custom ROS launch files for every little piece of functionality. Additionally, two of us opted to run everything on Windows Subsystem for Linux (WSL) rather than the intended Ubuntu Linux, purely for the fun of the challenge and to avoid dual-booting our personal computers.

The Turtlebot3 with a LiDAR scanner, and my trusty Lenovo powerhouse

The Process in Motion Pictures

Phase 1: Mapping the Environment

In this phase, the robot performed Simultaneous Localization and Mapping (SLAM) to create an accurate map of the environment. The following video captures our robot in action:

Poor robots are shy and don't like to be filmed. The pressure makes them perform poorly.

The following video is what peak performance in front of a camera looks like

Phase 2: Returning to Base

Once the environment was mapped, the robot needed to return to its starting position. Here’s a screen capture from ROS Visualization (RViz) during one of our successful mapping runs, shown at 4× real speed:

RViz screen capture during a full mapping run

Phase 3: Solving the Kidnapping Problem

The final challenge was the kidnapping problem, where the robot must determine its location after being moved to an unknown position on the map. We employed Adaptive Monte Carlo Localization (AMCL) to address this challenge. Below is a video demonstrating our robot’s successful navigation to a target point, albeit with a drive-to point placed a bit too close to the wall:

An elegant solution to the kidnapping problem using AMCL
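
For readers curious about the idea behind Monte Carlo localization, here is a minimal sketch of a particle filter in a made-up one-dimensional corridor. It is not the AMCL package or our project code: the corridor length, the noise values, and the names `expected_range` and `localize` are all assumptions chosen for illustration. It also keeps the number of particles fixed, whereas the "adaptive" part of AMCL adjusts the particle count as the estimate converges.

```python
import math
import random

WORLD_LENGTH = 10.0  # hypothetical 1D corridor with a wall at x = WORLD_LENGTH


def expected_range(x):
    """Range a forward-facing sensor would read from position x (assumed model)."""
    return WORLD_LENGTH - x


def gaussian(error, sigma):
    """Unnormalized Gaussian likelihood of a measurement error."""
    return math.exp(-0.5 * (error / sigma) ** 2)


def localize(measurements, controls, n_particles=500,
             motion_noise=0.05, sensor_noise=0.2, seed=0):
    """Estimate the final position from commanded moves and range readings."""
    rng = random.Random(seed)
    # Kidnapped robot: no prior knowledge, so spread particles over the whole map.
    particles = [rng.uniform(0.0, WORLD_LENGTH) for _ in range(n_particles)]

    for move, reading in zip(controls, measurements):
        # Motion update: shift every particle by the commanded move plus noise,
        # clamped so particles stay inside the corridor.
        particles = [
            min(max(p + move + rng.gauss(0.0, motion_noise), 0.0), WORLD_LENGTH)
            for p in particles
        ]
        # Measurement update: weight particles by how well they explain the reading.
        weights = [gaussian(reading - expected_range(p), sensor_noise)
                   for p in particles]
        if sum(weights) == 0.0:
            # No particle explains the reading: start over from a uniform spread.
            particles = [rng.uniform(0.0, WORLD_LENGTH) for _ in range(n_particles)]
            continue
        # Resampling: draw a new particle set in proportion to the weights.
        particles = rng.choices(particles, weights=weights, k=n_particles)

    # Use the particle mean as the position estimate.
    return sum(particles) / len(particles)


if __name__ == "__main__":
    # The robot is dropped at x = 3.0 (unknown to the filter) and drives 0.5 per step.
    controls = [0.5] * 5
    measurements = [WORLD_LENGTH - (3.0 + 0.5 * (i + 1)) for i in range(5)]
    print(f"estimated position: {localize(measurements, controls):.2f}")
```

The particles start out spread across the whole corridor because a kidnapped robot has no idea where it is. Each sensor reading then concentrates them around positions that agree with what the robot sees.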

As of this writing, our team holds the fastest completion time for this term, by about 40 seconds. We spent 76 seconds on phases 1 and 2, and 37 seconds on phase 3, totaling 113 seconds. I am proud of what we achieved as a team.

Final project paper